For the past week or more, I have been busily building out a new demo lab that Cohesity will use as our live demo environment at trade shows. Of course, this has involved a lot of new gear and a greenfield deployment. We are basing much of the design on how our team built the Cohesity On Wheels Mobile EBC, which has saved some time. In the process, however, we have not only taken those learnings and made improvements but also discovered some things specific to the networking stacks. Simply put, my design goal was to ensure that all Cohesity backup traffic was forced onto a specific VLAN and, in turn, a specific vSphere kernel port.

Some of this may already be familiar to you, but it should help other customers and partners.

Understanding Cohesity’s Bond0 Connection

In most implementations I have seen on the Cohesity side, people rarely bother with the 1G connections and generally use the node’s 10G ports. For the purpose of this discussion, let’s assume that is the case; for reference, those 10G ports make up BOND0.

The most common configuration at deployment is for the customer to set the switch ports for BOND0 to whatever native VLAN they want to use for management. This may or may not be the same VLAN that your vSphere hosts’ management interfaces use, which may matter later. So let’s start by laying out the basic Cohesity configuration for my deployment.

- BOND0 = Native routed VLAN10 (management and default system gateway)
  - 10.10.96.0/24
- BOND0.40 = Tagged backup VLAN40, non-routed
  - 172.16.40.0/24
- BOND0.50 = Tagged vSphere NFS VLAN50, non-routed
  - 172.16.50.0/24
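The plan above can be captured as data and sanity-checked before anything is configured on the switches. The sketch below uses Python’s standard `ipaddress` module; the interface names and subnets come from the list, while the `ADDRESS_PLAN` structure and the `check_plan` helper are hypothetical illustrations, not part of any Cohesity tool.

```python
import ipaddress
from itertools import combinations

# Hypothetical model of the Cohesity interface plan listed above.
# interface name -> (VLAN ID, subnet, routed?)
ADDRESS_PLAN = {
    "bond0":    (10, ipaddress.ip_network("10.10.96.0/24"), True),    # native, management + default gateway
    "bond0.40": (40, ipaddress.ip_network("172.16.40.0/24"), False),  # tagged, backup
    "bond0.50": (50, ipaddress.ip_network("172.16.50.0/24"), False),  # tagged, vSphere NFS
}

def check_plan(plan):
    """Return a list of problems found in the plan (an empty list means it looks sane)."""
    problems = []
    # Exactly one interface should carry the routed default gateway.
    routed = [name for name, (_, _, is_routed) in plan.items() if is_routed]
    if len(routed) != 1:
        problems.append(f"expected exactly one routed interface, found {routed}")
    # VLAN IDs must be unique across interfaces.
    vlans = [vlan for vlan, _, _ in plan.values()]
    if len(vlans) != len(set(vlans)):
        problems.append("duplicate VLAN IDs")
    # Overlapping subnets would make it ambiguous which interface owns an address.
    for (a, (_, net_a, _)), (b, (_, net_b, _)) in combinations(plan.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{a} and {b} overlap: {net_a} / {net_b}")
    return problems

if __name__ == "__main__":
    issues = check_plan(ADDRESS_PLAN)
    print("plan OK" if not issues else "\n".join(issues))
```

Keeping the plan as data like this makes it easy to re-check when VLANs are added later.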

I should point out that all of these interfaces will accept NFS, SMB, and S3 traffic from any client that can reach them. In general, most clients access the main IP on BOND0 because it is routable; the exception is vSphere, which reaches the non-routed VLANs directly through its own kernel ports. I won’t go into the node IPs and the various VIPs that also reside on each BOND0 interface, since those are already well documented.
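To make the routable versus non-routed distinction concrete, here is a simplified model of how a node picks the interface for outbound traffic: a destination inside a directly connected subnet goes out that interface, and everything else goes to the default gateway on BOND0. This is a generic longest-prefix-match illustration under that assumption, not Cohesity’s actual routing code, and the destination addresses are hypothetical.

```python
import ipaddress

# Directly connected subnets from the plan above (interface -> subnet).
CONNECTED = {
    "bond0": ipaddress.ip_network("10.10.96.0/24"),
    "bond0.40": ipaddress.ip_network("172.16.40.0/24"),
    "bond0.50": ipaddress.ip_network("172.16.50.0/24"),
}
DEFAULT_GATEWAY_IFACE = "bond0"  # only BOND0 is routed

def egress_interface(destination: str) -> str:
    """Pick the outbound interface: most specific connected subnet wins, else the default route."""
    addr = ipaddress.ip_address(destination)
    matches = [(net.prefixlen, iface) for iface, net in CONNECTED.items() if addr in net]
    if matches:
        # Longest prefix wins, mirroring normal route selection.
        return max(matches)[1]
    return DEFAULT_GATEWAY_IFACE

# Hypothetical destinations: a backup vmk, an NFS vmk, a management IP, an off-site client.
for dest in ["172.16.40.21", "172.16.50.21", "10.10.96.50", "192.168.1.10"]:
    print(dest, "->", egress_interface(dest))
```

The last two cases matter later: anything not on the backup or NFS subnets, including a vSphere host’s management address, is reached through BOND0 rather than the backup VLAN.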

The vSphere Kernel Ports

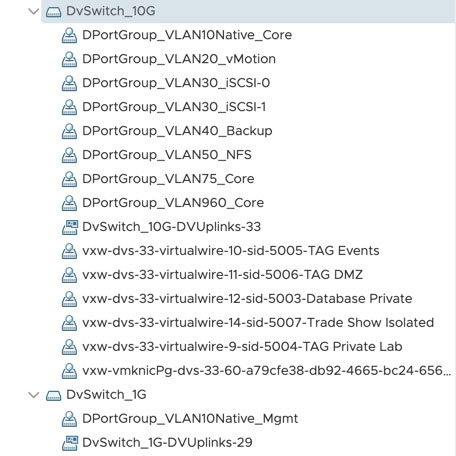

This is where our new build helped us work out the solution we were looking for. On our Dell servers, we decided to create two distributed switches: one uses the 1G connections strictly for management, and the other uses the 10G connections for everything else. Of course, each host’s management kernel port IP address is registered in DNS. Below is what the distributed switches and the kernel port configurations look like.

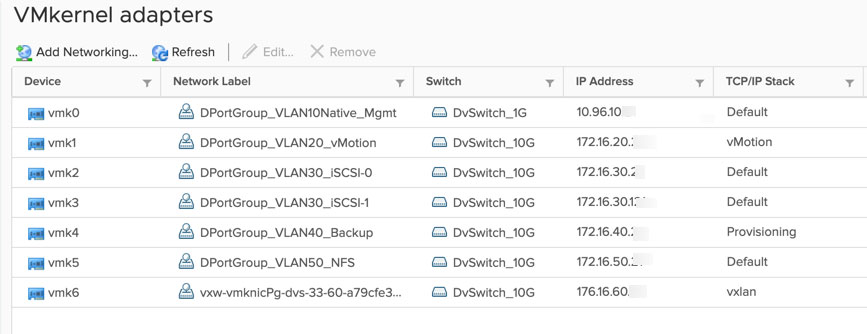

Each host connects to the 1G DVS with its 1G ports and to the 10G DVS with its 10G ports, and each port group has its own NIC teaming policy configured to balance the traffic. The 10G switch also has Network I/O Control (NIOC) enabled. Now let’s look at the various kernel ports on a single host.

As you can see, the only kernel port on the 1G switch is the management port; the 10G side has several others for vMotion, NFC, NSX, and, most importantly, backup. When we added the hosts to vCenter, we used their FQDNs, which of course resolve to the management kernel ports on the 1G switch.

Cohesity Backup Jobs

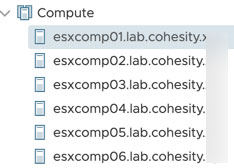

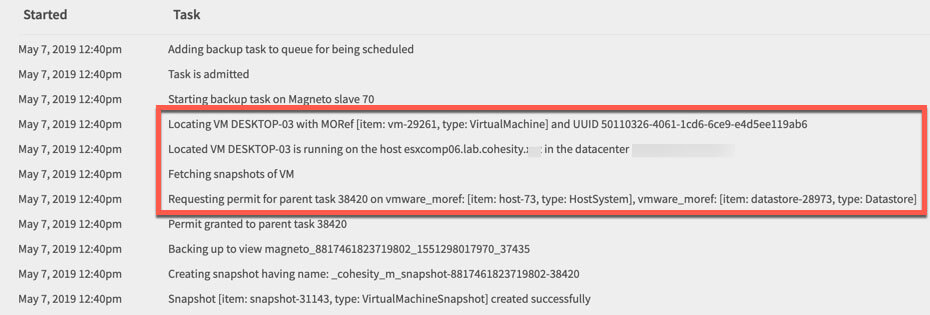

Here is where things got a little interesting. Still being a bit new to the Cohesity side of things, I started running some backups and noticed that they seemed oddly slow at first. I realized I was not entirely sure how, or from where, the Cohesity software was pulling the vSphere backup data. So I did what anyone would do and checked the logs, which clearly showed which host a given VM was located on.

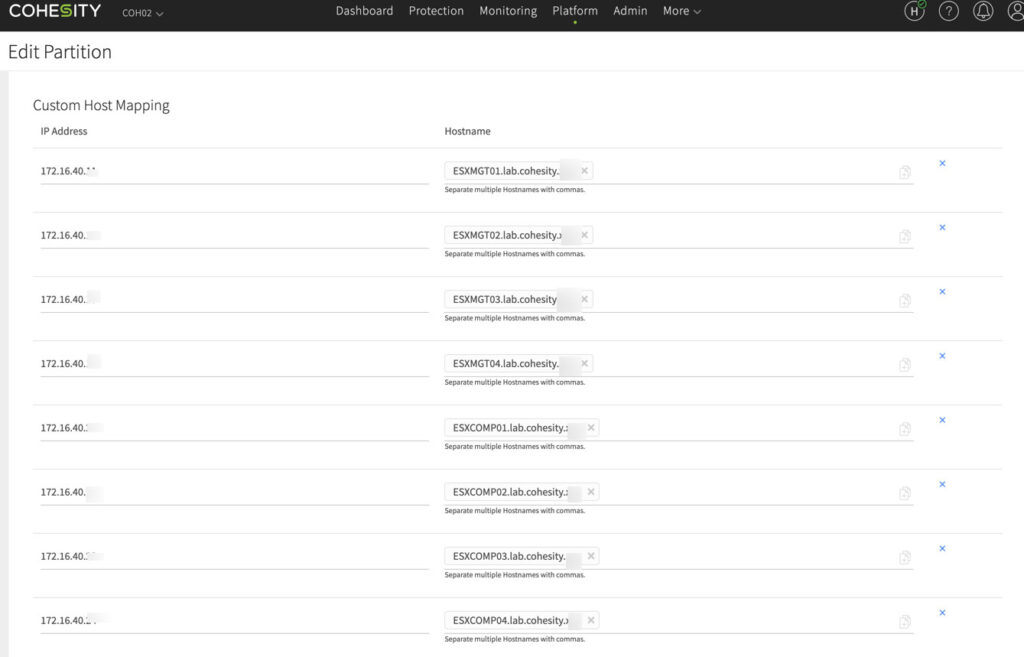

While a large job was running, I went to the identified host’s networking stats and clearly saw a spike in traffic on the NICs attached to the 1G distributed switch. Now I was onto something. Cohesity queries vCenter for the VM’s location, then connects to that host by name, and under the hood DNS was resolving that name to the management kernel port. After a call with my good friend Brock Mowry, we did a little digging and confirmed that we were not, in fact, using the backup network. So how did we resolve this and move all that traffic to the right ports? Easy: by using the Cohesity Custom Host Mappings page, located under “Edit Partition”.
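The lookup chain described above can be modeled in a few lines to show why the traffic ended up on the 1G switch. This is a minimal sketch of the behavior we observed, not Cohesity’s implementation; the VM name, host FQDN, vmk names, switch labels, and IP addresses are all hypothetical.

```python
import ipaddress

# Hypothetical lab data; names and addresses are illustrative only.
VCENTER_INVENTORY = {"sql01": "esx01.lab.local"}  # VM -> ESXi host FQDN, as vCenter reports it
DNS = {"esx01.lab.local": "10.10.96.21"}          # A record points at the management kernel port

# Hypothetical kernel ports on the host: (vmk name, IP, distributed switch).
HOST_VMKS = {
    "esx01.lab.local": [
        ("vmk0", "10.10.96.21", "1G-DVS"),    # management
        ("vmk3", "172.16.40.21", "10G-DVS"),  # backup (VLAN40)
    ]
}

def backup_path(vm, resolve):
    """Follow the chain: vCenter -> host FQDN -> resolved IP -> kernel port and switch."""
    host = VCENTER_INVENTORY[vm]
    ip = ipaddress.ip_address(resolve(host))
    for vmk, vmk_ip, dvs in HOST_VMKS[host]:
        if ipaddress.ip_address(vmk_ip) == ip:
            return host, str(ip), vmk, dvs
    raise LookupError(f"{ip} is not a kernel port on {host}")

# With plain DNS resolution, the backup lands on the management vmk on the 1G switch.
print(backup_path("sql01", lambda name: DNS[name]))
# ('esx01.lab.local', '10.10.96.21', 'vmk0', '1G-DVS')
```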

Essentially, this lets us create a local mapping for the same hostname that is in DNS; it acts like a hosts-file entry, pointing that name at the IP address of the host’s kernel port on VLAN40, our backup network. The page is easy to reach in the user interface, and once the mappings are added, nothing else is required. When we re-ran the backups, the traffic clearly moved to the 10G side, and backup times improved drastically.
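The precedence described above (local mapping first, DNS as the fallback) can be sketched as follows, together with a quick check that every host now resolves into the backup subnet. The hostnames, IPs, and the `resolve`/`unmapped_hosts` helpers are hypothetical; this models hosts-file-style behavior and is not the Cohesity API.

```python
import ipaddress

# Hypothetical DNS records and custom host mappings; names and IPs are illustrative only.
DNS = {"esx01.lab.local": "10.10.96.21", "esx02.lab.local": "10.10.96.22"}
CUSTOM_HOST_MAPPINGS = {  # same hostnames as in DNS, pointed at each host's VLAN40 backup vmk
    "esx01.lab.local": "172.16.40.21",
    "esx02.lab.local": "172.16.40.22",
}
BACKUP_NET = ipaddress.ip_network("172.16.40.0/24")

def resolve(hostname):
    """A local mapping wins, like a hosts-file entry; otherwise fall back to DNS."""
    if hostname in CUSTOM_HOST_MAPPINGS:
        return CUSTOM_HOST_MAPPINGS[hostname]
    return DNS[hostname]

def unmapped_hosts(hostnames):
    """Return hosts whose resolved address still falls outside the backup network."""
    return [h for h in hostnames if ipaddress.ip_address(resolve(h)) not in BACKUP_NET]

print(resolve("esx01.lab.local"))    # the backup vmk address, not the DNS record
print(unmapped_hosts(DNS.keys()))    # an empty list means every host is covered
```

A check like this is handy when hosts are added later, since a new host without a mapping silently falls back to DNS and the management network.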

Summary

I have long been a proponent of making sure you understand the plumbing in your architecture, not only on the NSX side of things but at the core vSphere level too. Fully understanding your architecture, including its integrations and communication paths, will help you get more out of Cohesity. While not everyone still uses 1G ports for management, many people do. If you are among them, as I am, it is worth taking a peek to make sure your backup traffic actually travels over the network you intended, so you get the full benefit of Cohesity’s high-performance distributed backup processes.